The Tragedy May Be Real. The Photos Are Not.

Photos of the Texas flood victims show AI artifacts. News outlets published them anyway.

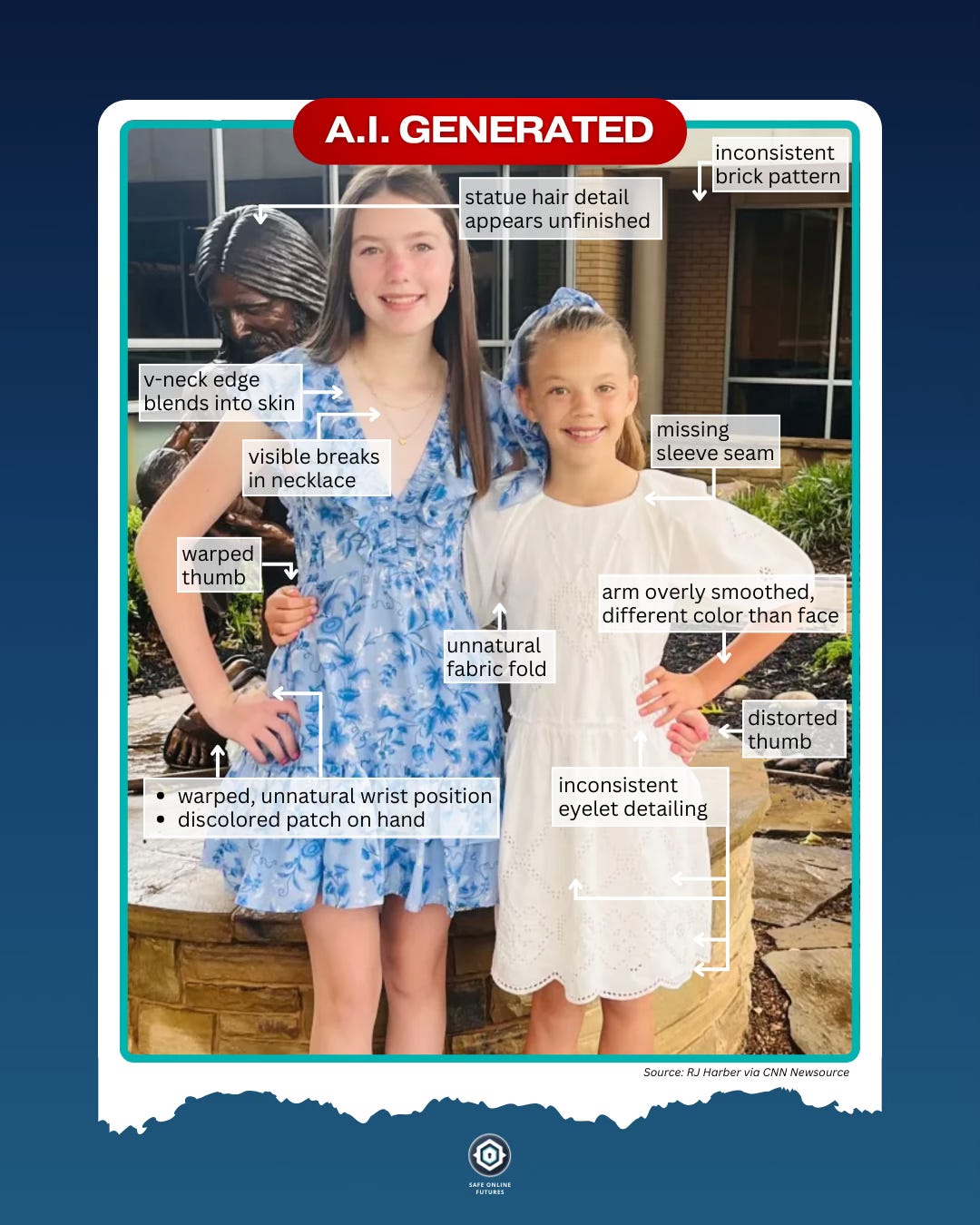

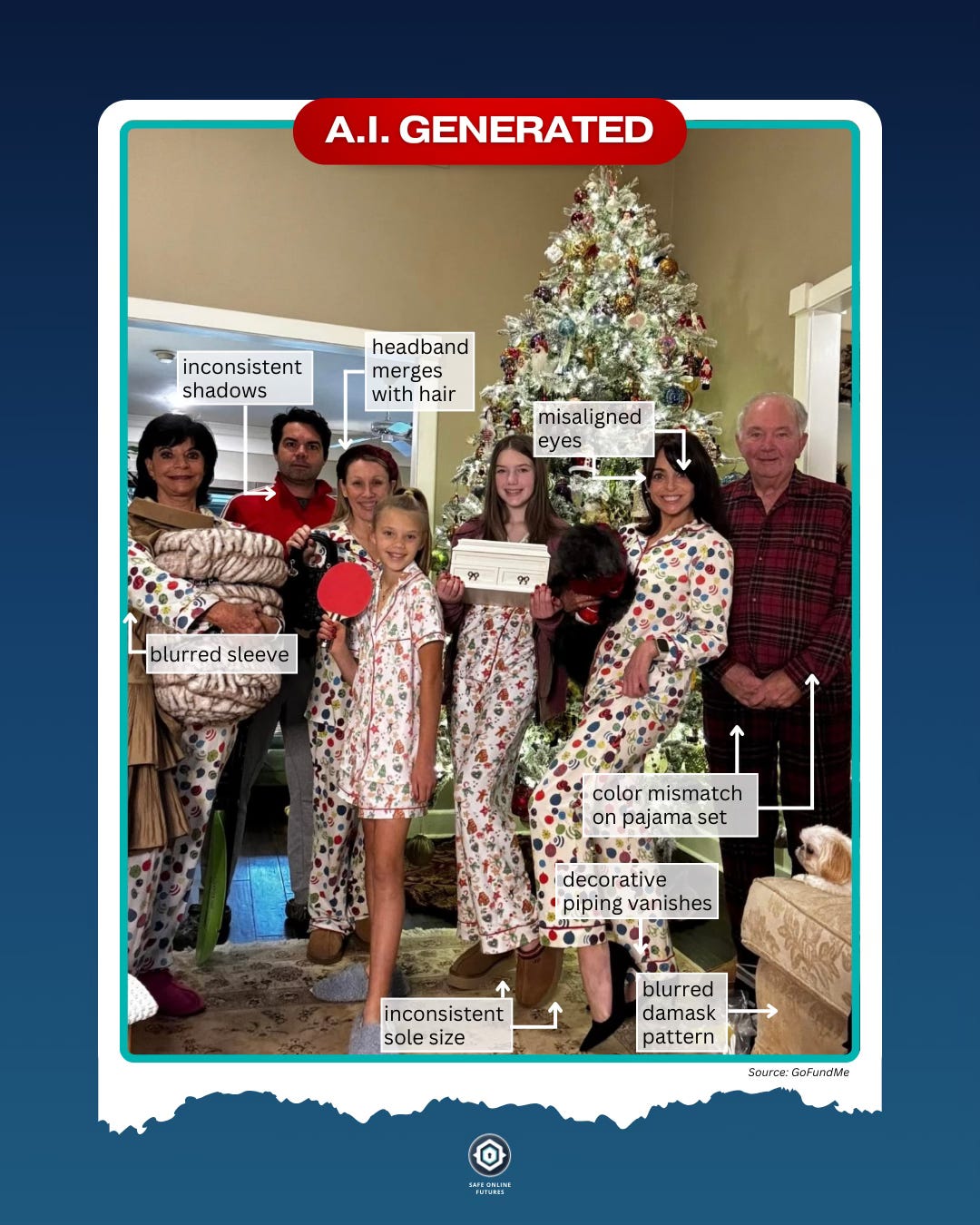

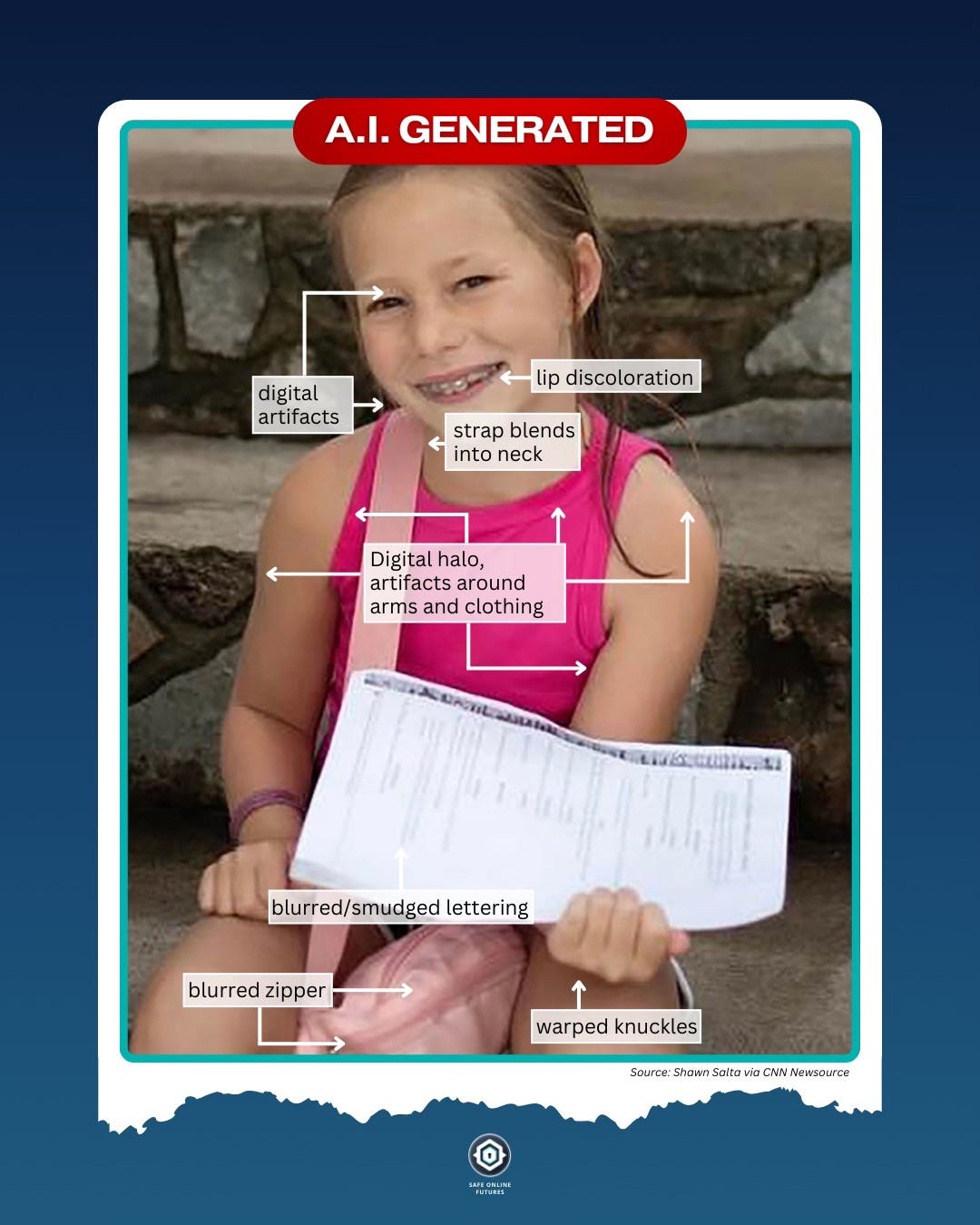

The memorial photos from the Texas floods looked ordinary at first glance. Major outlets, including CBS, People, and even local affiliates, ran images that appeared to be standard family snapshots. But zoom in and examine the details, and the problems become visible: warped facial features, unnatural patches of skin and hair, and anatomical distortions that shouldn't exist in real photography.

These weren't crude deepfakes. They were images that passed casual inspection but fell apart under scrutiny. Not all of them showed these signs, but enough did to establish a troubling pattern.

The families were credited as sources. Some of the photos also appeared in social media tributes and in a GoFundMe campaign that has raised over $350,000 to date. Yet on closer inspection, the images show telltale inconsistencies: warped facial symmetry, distorted clothing patterns, inconsistent fabric textures, and anatomical impossibilities that don't occur in conventional photography. These artifacts are hard to miss once you know what to look for.

The Photos Are Credited to Family Members. But Something's Off.

News outlets consistently cite these images as having come directly from the victims' loved ones, with captions like "photo courtesy of the family" or "via GoFundMe." But the visual evidence tells a different story.

Some of the most widely shared photos show classic signs of AI generation or enhancement: unnatural skin textures, inconsistent lighting, flattened expressions, and eyes that don't quite align correctly. Others feature bizarre anatomical issues like warped appendages and distorted hairlines that don't occur in standard photography.

There are several plausible explanations:

A family member may have used an AI photo enhancer, like Remini, FaceApp, or Photoshop Generative Fill, to "improve" an old or blurry photo, not realizing that those tools can hallucinate details rather than simply sharpen existing ones.

In moments of grief, families might rely on others — friends, fundraisers, or volunteers — to manage tribute images. These intermediaries may insert stylized or AI-generated photos into the mix without disclosure.

In some cases, the photos may have originated from a social media post or fundraiser already using AI-generated content for visual impact.

And in the worst-case scenarios, someone may have fabricated or manipulated an image entirely and passed it off as real — to harvest sympathy, raise money, or amplify a narrative.

We've seen this playbook before. Following the June 2025 shootings of Minnesota legislators, multiple tribute photos, some credited to family members and others sourced from what were said to be the victims' social media accounts, showed clear signs of AI generation. Still, the media ran them uncritically, assuming authenticity without verification.

Why Detection Tools Are Worthless

Many journalists and editors, faced with suspicious imagery, turn to online AI detection tools. These services consistently fail where it matters most. I ran the Texas flood photos through multiple detection platforms. The verdict was nearly universal: "Likely real" or "Highly unlikely to be AI-generated."

This isn't surprising. Current detection tools were designed to identify fully synthetic images from models like Midjourney or DALL-E. They struggle with hybrid content — real photos enhanced or modified with AI — which represents the majority of synthetic media in circulation today.

The failures are documented. PetaPixel found that Optic's detection tool misidentified authentic war photography as AI-generated due to compression artifacts. A Washington Post investigation revealed that even sophisticated detection services used by major platforms were "largely untested" and routinely failed in real-world applications.

These tools appear to be calibrated to minimize false positives, meaning they default to "real" unless confronted with obvious synthetic markers. This makes them worse than useless: they provide false confidence in authenticity when visual analysis suggests otherwise.
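To make the calibration point concrete, here is a minimal sketch in Python of how a detector's decision threshold trades one kind of error for the other. It is an illustration under stated assumptions, not a description of how any real detection service works: the scores are invented, and `error_rates` is a hypothetical helper written for this example.

```python
# Hypothetical detector scores: the estimated probability that an image is
# AI-generated. Every number here is invented for illustration only.
real_scores = [0.05, 0.10, 0.20, 0.35, 0.55]      # authentic photos
enhanced_scores = [0.30, 0.45, 0.60, 0.70, 0.85]  # real photos altered with AI


def error_rates(threshold):
    """Return (false_positive_rate, false_negative_rate) for a threshold.

    An image is flagged as AI only when its score is >= threshold.
    A false positive is an authentic photo flagged as AI.
    A false negative is an AI-altered photo that passes as real.
    """
    false_positives = sum(score >= threshold for score in real_scores)
    false_negatives = sum(score < threshold for score in enhanced_scores)
    return (false_positives / len(real_scores),
            false_negatives / len(enhanced_scores))


for threshold in (0.25, 0.50, 0.75, 0.90):
    fpr, fnr = error_rates(threshold)
    print(f"threshold={threshold:.2f}  "
          f"real flagged as AI={fpr:.0%}  "
          f"AI-altered passed as real={fnr:.0%}")
```

In this toy data, raising the threshold drives the share of authentic photos wrongly flagged toward zero, while the share of AI-altered photos that pass as real climbs toward 100 percent. A tool tuned this way will rarely embarrass itself by accusing a real photo, and it will rarely catch an enhanced one.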

Editorial Failure at Scale

The media's handling of these images represents a fundamental breakdown in editorial standards. Three scenarios explain what we're witnessing:

Scenario One: News outlets didn't verify the images before publication. This constitutes gross negligence, particularly when the images are central to public emotional response and fundraising efforts.

Scenario Two: Editors recognized the signs of AI manipulation but published anyway, quietly normalizing synthetic grief as acceptable journalistic content.

Scenario Three: Editorial teams relied on flawed AI detection tools, outsourcing their judgment to software that fails regularly in professional settings.

In each case, the public is being misled. When we see an image of a deceased child, we don't process it as mere information — we absorb it emotionally. That image becomes our memory of the tragedy. If it's been algorithmically enhanced or reconstructed, then so has our understanding of the loss itself.

The Regulatory Void

This problem persists because there's no legal framework requiring disclosure of AI-generated or AI-manipulated content. No standards for image verification before publication. No transparency requirements for photos involving real victims and real grief.

The infrastructure of truth is crumbling while the tools of deception become more sophisticated.

We're witnessing the quiet dissolution of the boundary between authentic and artificial in the most sensitive contexts imaginable.

What I've Learned

After documenting signs of AI in media for the past year, here's what I've learned:

Your visual instincts are more reliable than any publicly available detection tool. If something looks wrong, investigate further. Perfect symmetry, unnatural skin textures, and anatomical inconsistencies are red flags that shouldn't be ignored.

The media must establish verification protocols for sensitive imagery. Source attribution is insufficient when the technology to create convincing fakes is freely available.

We need legislative frameworks that require disclosure of AI-generated or AI-enhanced content, particularly in news contexts and fundraising campaigns.

The Path Forward

The technology will only become more sophisticated. The tools will become more accessible. The line between real and artificial will continue to blur unless we establish clear standards and accountability measures.

This isn't about perfect detection — it's about honest disclosure. If an image has been enhanced, altered, or generated with AI, the public deserves to know. Especially when that image is being used to shape our understanding of tragedy and loss.

We're at a crossroads. We can continue allowing synthetic content to infiltrate our most sacred spaces — grief, remembrance, truth-telling — or we can demand the infrastructure necessary to preserve the distinction between what happened and what algorithms think should have happened.

The choice is ours. But we need to make it soon, before the technology makes it for us.

See more photos from the Texas floods that show signs of AI. View the full photo breakdown on Instagram / Facebook / TikTok / LinkedIn.
